The topic of mining centralization has been a very important one over the past few weeks. GHASH.io, the Bitcoin network’s largest mining pool, has for the past month directed over 40% of the Bitcoin network’s hashpower, and two weeks ago briefly spiked over 50%, theoretically giving it monopoly control over the Bitcoin network. Although miners quickly left the pool and reduced its hashpower to 35%, it’s clear that the problem is not solved. At the same time, ASICs threaten to further centralize the very production. One approach to solving the problem is the one I advocated in my previous post: create a mining algorithm that is guaranteed to remain CPU-friendly in the long term. Another, however, is to abolish mining entirely, and replace it with a new model for seeking consensus.

The primary contender for this second approach so far has been a strategy called “proof of stake”, the intuition behind which is as follows. In a traditional proof-of-work blockchain, miners “vote” on which transactions came at what time with their CPU power, and the more CPU power you have, the proportionately larger your influence. Proof of stake follows an analogous paradigm: stakeholders vote with their dollars (or rather, the internal currency of the particular system). Technically, the simplest setup is a model that has been called the “simulated mining rig”: essentially, every account has a certain chance per second of generating a valid block, much like a piece of mining hardware, and this chance is proportional to the account’s balance. The simplest formula for this is:

SHA256(prevhash + address + timestamp) <= 2^256 * balance / diff

prevhash is the hash of the previous block, address is the address of the stake-miner, timestamp is the current Unix time in seconds, balance is the account balance of the stake-miner and diff is an adjustable global difficulty parameter. If a given account satisfies this inequality at any particular second, it may produce a valid block, earning that account a block reward.
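
To make the formula concrete, here is a minimal Python sketch of the per-second eligibility check. The byte encoding of the timestamp (8-byte big-endian) and the use of raw bytes for prevhash and address are assumptions for illustration; a real client would also have to validate signatures and timestamps.

```python
import hashlib


def can_stake_mine(prevhash: bytes, address: bytes, timestamp: int,
                   balance: int, diff: int) -> bool:
    """Check SHA256(prevhash + address + timestamp) <= 2^256 * balance / diff.

    Encoding the timestamp as 8 big-endian bytes is an assumption for illustration.
    """
    data = prevhash + address + timestamp.to_bytes(8, "big")
    h = int.from_bytes(hashlib.sha256(data).digest(), "big")
    # Multiply both sides by diff so the comparison stays in exact integer arithmetic.
    return h * diff <= (1 << 256) * balance


# An account whose balance is 1/100 of diff should succeed in roughly 1% of seconds.
prev = hashlib.sha256(b"previous block").digest()
hits = sum(can_stake_mine(prev, b"stake-miner-1", t, balance=1, diff=100)
           for t in range(20_000))
print(hits)
```

Because the only free input is the timestamp, an account gets exactly one attempt per second; hashing faster buys nothing.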

Another approach is to use not the balance but the “coin age” (ie. the balance multiplied by the amount of time that the coins have not been touched) as the weighting factor. This guarantees more even returns, but at the expense of potentially much easier collusion attacks (since attackers can accumulate coin age) and possible superlinearity; for these reasons, I prefer the plain balance-based approach in most cases, and we will use it as our baseline for the rest of this discussion.

Other solutions to “proof of X” have been proposed, including excellence, bandwidth, storage and identity, but none are particularly convenient as consensus algorithms; rather, all of these systems share many of the properties of proof of stake, and are thus best implemented indirectly – by making them purely mechanisms for currency distribution, and then using proof of stake on those distributed coins for the actual consensus. The only exception is perhaps the social-graph-theory-based Ripple, although many cryptocurrency proponents consider such systems far too trust-dependent to be truly “decentralized”; this point can be debated, but it is best to focus on one topic at a time, so we will focus on stake.

Strengths and Weaknesses

If it can be implemented correctly, proof of stake in theory has many advantages, three in particular:

- It does not waste any significant amount of electricity. Sure, stakeholders need to keep trying to produce blocks, but no one gains any benefit from making more than one attempt per account per second; hence, the electricity expenditure is comparable to that of any other non-wasteful internet protocol (eg. BitTorrent).

- It can arguably provide a much higher level of security. In proof of work, assuming a liquid market for computing power, the cost of launching a 51% attack is equal to the cost of the network’s computing power over the course of two hours – an amount that, by standard economic principles, is roughly equal to the total sum of block rewards and transaction fees provided in two hours. In proof of stake, the threshold is theoretically much higher: 51% of the entire supply of the currency.

- Depending on the precise algorithm in question, it can potentially allow for much faster blockchains (eg. NXT has one block every few seconds, compared to one per minute for Ethereum and one per ten minutes for Bitcoin).

Note that there is one important counterargument that has been made to #2: if a large entity credibly commits to purchasing 51% of currency units and then using those funds to repeatedly sabotage the network, then the price will fall drastically, making it much easier for that entity to purchase the tokens. This does somewhat mitigate the benefit of stake, although not nearly fatally; an entity that can credibly commit to purchasing 50% of coins is likely also one that can launch 51% attacks against proof of work.

However, with the naive proof of stake algorithm described above, there is one serious problem: as some Bitcoin developers describe it, “there is nothing at stake”. What that means is this: in the context of a proof-of-work blockchain, if there is an accidental fork, or a deliberate transaction reversal (“double-spend”) attempt, and there are two competing forks of the blockchain, then miners have to choose which one they contribute to. They have three choices:

- Mine on no chain and get no rewards

- Mine on chain A and get the reward if chain A wins

- Mine on chain B and get the reward if chain B wins

As I have commented in a previous post, note the striking similarity to SchellingCoin/Truthcoin here: you win if you go with what everyone else goes with, except in this case the vote is on the order of transactions, not a numerical (as in SchellingCoin) or binary (as in TruthCoin) datum. The incentive is to support the chain that everyone else supports, forcing rapid convergence, and preventing successful attacks provided that at least 51% of the network is not colluding.

In the naive proof of stake algorithm, on the other hand, the choices of whether or not to vote on A and whether or not to vote on B are independent; hence, the optimal strategy is to mine on any fork that you can find. Thus, in order to launch a successful attack, an attacker need only overpower all of the altruists who are willing to vote only on the correct chain.
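
A toy payoff model makes the contrast explicit. The fork-win probability and the 1% resource share below are hypothetical; only the structure matters. In proof of work, effort spent on one chain is subtracted from the other, while in naive proof of stake it is not.

```python
def pow_payoff(share_on_a: float, p_a_wins: float, hashpower: float = 0.01) -> float:
    """Expected reward for a PoW miner who splits its hashpower between forks A and B."""
    # Hashpower is a finite, physical resource: whatever goes to A is taken from B.
    share_on_b = 1.0 - share_on_a
    return hashpower * (share_on_a * p_a_wins + share_on_b * (1.0 - p_a_wins))


def naive_pos_payoff(sign_a: bool, sign_b: bool, p_a_wins: float,
                     stake: float = 0.01) -> float:
    """Expected reward for a naive-PoS stake-miner who may sign on A, B, or both."""
    # Signing on one fork costs nothing on the other, so the two choices are independent.
    return stake * (int(sign_a) * p_a_wins + int(sign_b) * (1.0 - p_a_wins))


p = 0.9  # hypothetical probability that fork A ends up winning
print(pow_payoff(1.0, p), pow_payoff(0.5, p))  # going all-in on A beats splitting
print(naive_pos_payoff(True, False, p), naive_pos_payoff(True, True, p))  # signing both wins
```

In the PoW function the best split is to put everything on the likelier chain, which is the Schelling-point behaviour described above; in the naive PoS function, signing both forks weakly dominates every other choice.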

The problem is, unfortunately, somewhat fundamental. Proof of work is nice because the property of hash verification allows the network to be aware of something outside of itself – namely, computing power, and that thing serves as a sort of anchor to ensure some stability. In a naive proof of stake system, however, the only thing that each chain is aware of is itself; hence, one can intuitively see that this makes such systems more flimsy and less stable. However, the above is merely an intuitive argument; it is by no means a mathematical proof that a proof-of-stake system cannot be incentive-compatible and secure, and indeed there are a number of potential ways to get around the issue.

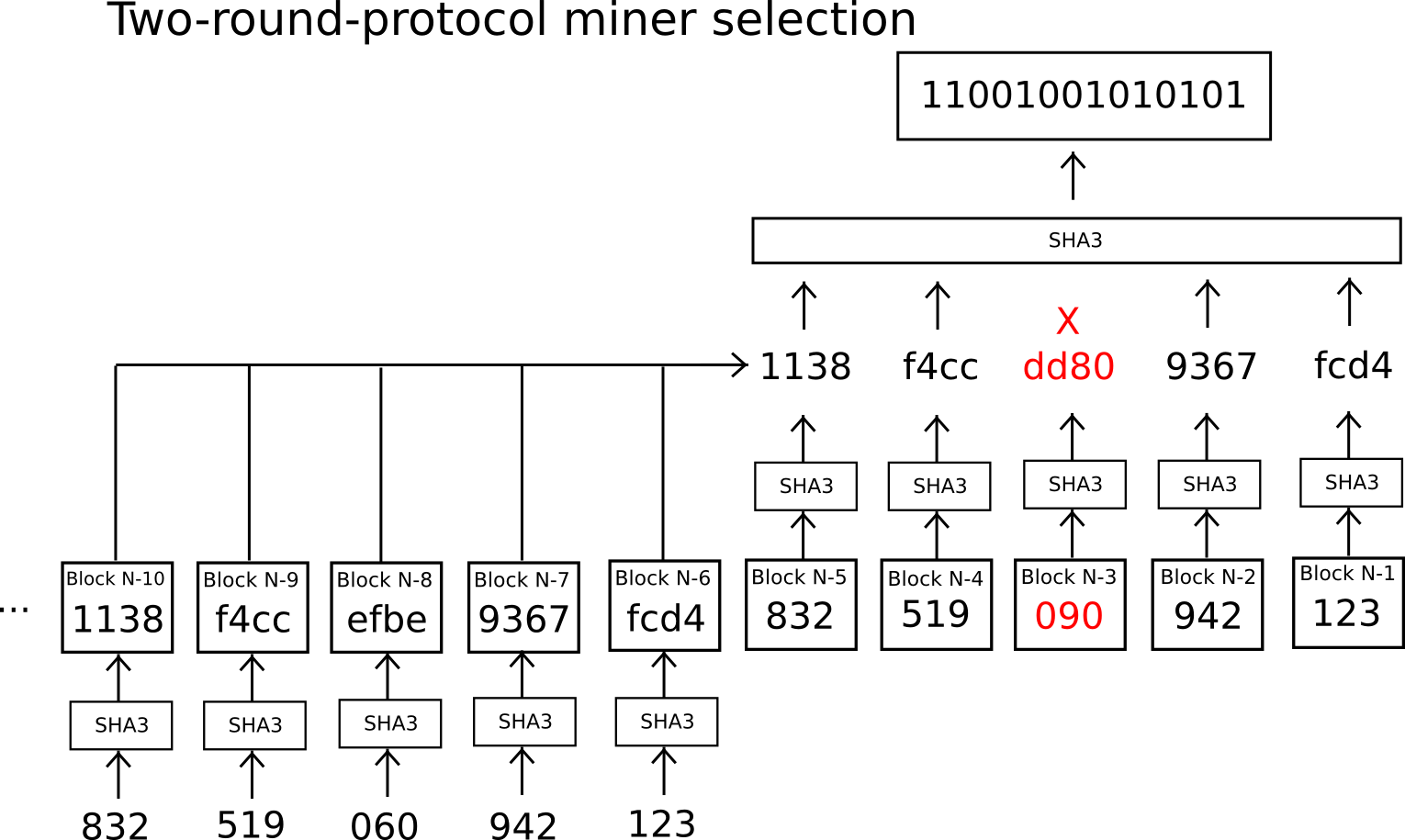

The first strategy is the one that is employed in the Slasher algorithm, and it hinges on a simple realization: although, in the case of a fork, chains are not aware of anything in the outside world, they are aware of each other. Hence, the way the protocol prevents double-mining is this: if you mine a block, the reward is locked up for 1000 blocks, and if you also mine on any other chain then anyone else can submit the block from the other chain into the original chain in order to steal the mining reward. Note, however, that things are not quite so simple, and there is one catch: the miners have to be known in advance. The problem is that if the algorithm given above is used directly, a probabilistic strategy makes double-mining very easy to hide.
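
The locking-and-evidence rule can be sketched as a small data structure. This is a simplified Python illustration, not Slasher's actual specification: signatures are assumed to have been verified already, and SignedHeader, SlasherChain and submit_evidence are hypothetical names.

```python
from dataclasses import dataclass, field

LOCK_PERIOD = 1000  # blocks a mining reward stays locked, as in the text


@dataclass(frozen=True)
class SignedHeader:
    # Hypothetical stand-in for a signed header; signature checks are omitted here.
    signer: str
    height: int
    block_hash: str


@dataclass
class SlasherChain:
    height: int = 0
    headers: dict = field(default_factory=dict)   # height -> SignedHeader on this chain
    locked: dict = field(default_factory=dict)    # height -> (signer, reward)
    balances: dict = field(default_factory=dict)  # account -> spendable balance

    def add_block(self, header: SignedHeader, reward: int) -> None:
        self.height = header.height
        self.headers[header.height] = header
        self.locked[header.height] = (header.signer, reward)
        # Rewards older than LOCK_PERIOD blocks become spendable.
        for h in [h for h in self.locked if h <= self.height - LOCK_PERIOD]:
            signer, amount = self.locked.pop(h)
            self.balances[signer] = self.balances.get(signer, 0) + amount

    def submit_evidence(self, submitter: str, other: SignedHeader) -> bool:
        """Let anyone claim a locked reward by showing that the same signer
        signed a different block at the same height on another chain."""
        ours = self.headers.get(other.height)
        if ours is None or other.height not in self.locked:
            return False  # no block here, or the reward is already unlocked or claimed
        if ours.signer != other.signer or ours.block_hash == other.block_hash:
            return False  # not evidence of double-signing
        _, amount = self.locked.pop(other.height)
        self.balances[submitter] = self.balances.get(submitter, 0) + amount
        return True


chain = SlasherChain()
chain.add_block(SignedHeader("alice", 1, "A1"), reward=10)
# Alice also signed height 1 on fork B; Bob submits that header and takes her reward.
print(chain.submit_evidence("bob", SignedHeader("alice", 1, "B1")), chain.balances)
```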

The issue is this: suppose that you have 1% stake, and thus every block there is a 1% chance that you will be able to produce (hereinafter, “sign”) it. Now, suppose there is a fork between chain A and chain B, with chain A being the “correct” chain. The “honest” strategy is to try to generate blocks just on A, getting an expected 0.01 A-coins per block. An alternative strategy, however, is to try to generate blocks on both A and B, and if you find a block on both at the same time then discarding B. The payout per block is one A-coin if you get lucky on A (0.99% chance), one B-coin if you get lucky on B (0.99% chance) and one A-coin, but no B-coins, if you get lucky on both; hence, the expected payout is 0.01 A-coins plus 0.0099 B-coins if you double-vote. If the stakeholders that need to sign a particular block are decided in advance, however (ie. specifically, decided before a fork starts), then there is no possibility of having the opportunity to vote on A but not B; you either have the opportunity on both or neither. Hence, the “dishonest” strategy simply collapses into being the same thing as the “honest” strategy.
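
The arithmetic in this paragraph can be checked with a few lines of Python. expected_payouts is a hypothetical helper that simply encodes the cases described above.

```python
def expected_payouts(p: float, signers_fixed_in_advance: bool):
    """Expected (A-coins, B-coins) per block for the honest and double-voting strategies.

    p is the stake-miner's chance of being allowed to sign a given block.
    """
    honest = (p, 0.0)  # only ever try to sign on A
    if signers_fixed_in_advance:
        # The same draw applies to both forks: you can sign both or neither.
        # When you can sign both, the strategy discards B, so it earns what honesty does.
        double = (p, 0.0)
    else:
        # Independent lotteries on each fork.
        a_only = p * (1 - p)
        b_only = (1 - p) * p
        both = p * p  # sign A and discard B, leaving no evidence of double-signing
        double = (a_only + both, b_only)
    return honest, double


print(expected_payouts(0.01, signers_fixed_in_advance=False))
print(expected_payouts(0.01, signers_fixed_in_advance=True))
```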

The Block Signer Selection Problem

But then if block signers are decided in advance, another issue arises: if done wrong, block signers could “mine” their blocks, repeatedly trying to create a block with different random data until the resulting block triggers that same signer having the privilege to sign a block again very soon. For example, if the signer for block N+1000 was simply chosen from the hash of block N, and an attacker had 1% stake, then the attacker could keep rebuilding the block until block N+1000 also had the attacker as its signer (ie. an expected 100 iterations). Over time, the attacker would naturally gain signing privilege on other blocks, and thus eventually come to completely saturate the blockchain with length-1000 cycles controlled by himself. Even if the hash of 100 blocks put together is used, it’s possible to manipulate the value. Thus, the question is, how do we determine what the signers for future blocks are going to be?
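
A small simulation shows why deriving a future signer from a single block hash is exploitable. The selection rule here (hash modulo total stake, with the attacker owning the first 1% of the range) and the grind helper are assumptions for illustration, not any particular protocol's rule.

```python
import hashlib


def attacker_selected(block_data: bytes, attacker_stake: int, total_stake: int) -> bool:
    """Naive rule: the signer of block N+1000 is drawn from hash(block N).

    Stake units [0, attacker_stake) are assumed to belong to the attacker.
    """
    draw = int.from_bytes(hashlib.sha256(block_data).digest(), "big") % total_stake
    return draw < attacker_stake


def grind(block_body: bytes, attacker_stake: int, total_stake: int) -> int:
    """Rebuild block N with fresh junk data until the attacker also signs N+1000.

    Returns how many attempts it took.
    """
    attempt = 0
    while True:
        attempt += 1
        candidate = block_body + attempt.to_bytes(8, "big")
        if attacker_selected(candidate, attacker_stake, total_stake):
            return attempt


trials = [grind(f"block-{i}".encode(), attacker_stake=1, total_stake=100)
          for i in range(200)]
print(sum(trials) / len(trials))
```

With 1% stake each attempt succeeds with probability 0.01, so the average number of attempts should come out near 100. Since producing a candidate block costs almost nothing, that is no obstacle at all.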

The solution used in Slasher is to use a secure decentralized random number generator protocol: many parties come in, first submit to the blockchain the hashes of their values, and then submit their values. There is no chance of manipulation this way, because each submitter is bound to submit in the second round the value whose hash they provided in the first round, and in the first round no one has enough information in order to engage in any manipulation. The player still has a choice of whether or not to participate in the second round, but the two countervailing points are that (1) this is only one bit of freedom, although it becomes greater for large miners that can control multiple accounts, and (2) we can institute a rule that failing to participate causes forfeiture of one’s mining privilege (miners in round N choose miners for round N+1 during round N-1, so there is an opportunity to do this if certain round-N miners misbehave during this selection step).
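
The two-round commit-reveal protocol can be sketched as follows. Two details go beyond the text and are assumptions. First, each participant hashes a random salt together with its value, so low-entropy values cannot be guessed from the commitment. Second, the revealed values are combined with XOR. Participants who commit but then fail to reveal, or reveal something that does not match, are returned so that a forfeiture rule could be applied to them.

```python
import hashlib
import secrets


def commit(value: bytes, salt: bytes) -> bytes:
    """Round 1: publish only this hash, which binds you to `value`."""
    return hashlib.sha256(salt + value).digest()


def combine_reveals(commitments: dict, reveals: dict) -> tuple:
    """Round 2: verify each reveal against its commitment and XOR the valid values.

    commitments: participant -> hash published in round 1
    reveals:     participant -> (salt, value) published in round 2
    Returns (random_number, participants_who_failed_to_reveal_correctly).
    """
    result = 0
    defaulted = set()
    for who, digest in commitments.items():
        if who not in reveals or commit(reveals[who][1], reveals[who][0]) != digest:
            defaulted.add(who)  # candidates for losing their mining privilege
            continue
        result ^= int.from_bytes(reveals[who][1], "big")
    return result, defaulted


# Three participants commit; "carol" uses her one bit of freedom and stays silent.
secrets_by_party = {name: (secrets.token_bytes(16), secrets.token_bytes(32))
                    for name in ("alice", "bob", "carol")}
commitments = {name: commit(value, salt)
               for name, (salt, value) in secrets_by_party.items()}
reveals = {name: secrets_by_party[name] for name in ("alice", "bob")}
number, defaulted = combine_reveals(commitments, reveals)
print(defaulted)  # {'carol'}
```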

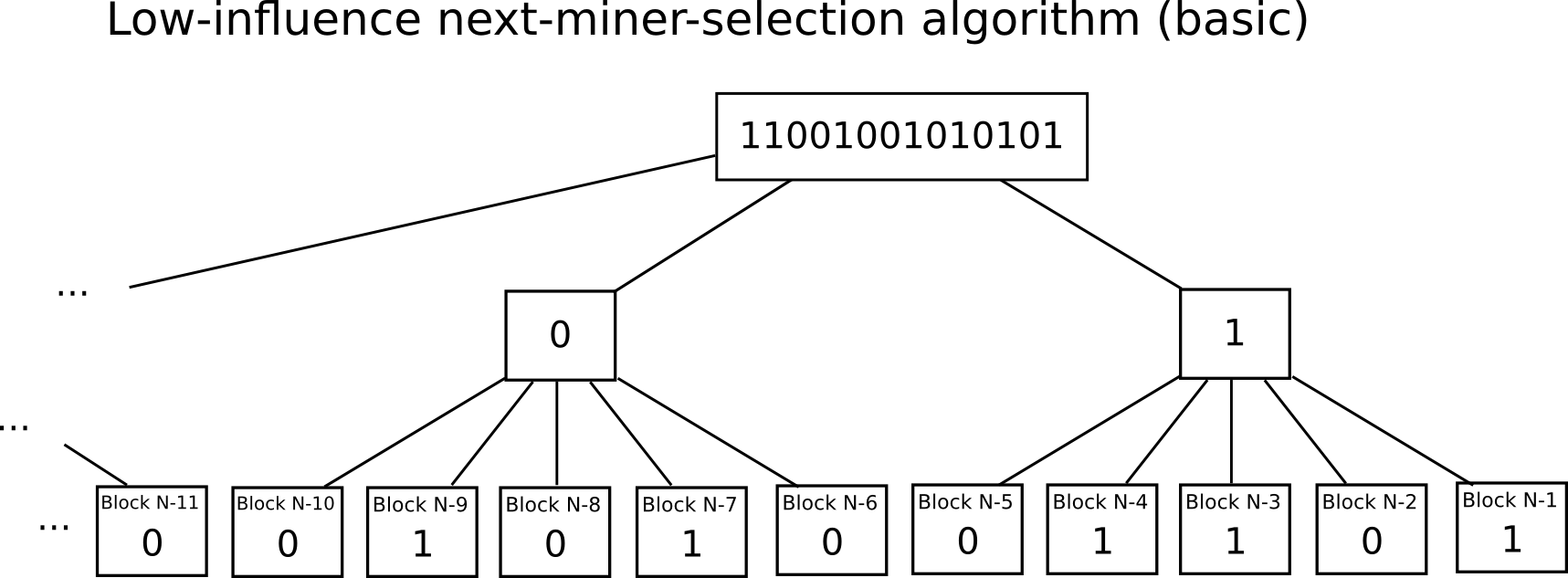

Another idea, proposed by Iddo Bentov and others in their “Cryptocurrencies Without Proof of Work” paper, is to use something called a “low-influence” function – essentially, a function such that there is only a very low chance that a single actor will be able to change the result by changing the input. A simple example of an LIF over small sets is majority rule; here, because we are trying to pick a random miner, we have a very large set of options to choose from, so majority rule per bit is used (eg. if you have 500 parties and you want to pick a random miner out of a billion, which takes thirty bits, assign them to thirty groups of roughly 17, have each group vote on whether its particular bit is zero or one, and then recombine the bits as a binary number at the end). This removes the need for a complicated two-step protocol, allowing it to potentially be done much more quickly and even in parallel, reducing the risk that the pre-chosen stake-miners for some particular block will get together and collude.
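
Per-bit majority voting, as in the example above, is easy to sketch. This is not the construction from the paper, only the simple example described in the text; the grouping rule and tie-breaking below are assumptions made for illustration.

```python
import random


def majority_bit(votes: list) -> int:
    """Majority rule: a single voter rarely changes the outcome.

    Ties (possible in even-sized groups) resolve to 0, an arbitrary choice.
    """
    return 1 if 2 * sum(votes) > len(votes) else 0


def pick_miner_index(party_bits: list, n_bits: int = 30) -> int:
    """Split parties into n_bits groups, let each group fix one bit by majority,
    then read the bits as a binary number in [0, 2**n_bits).

    Grouping by index modulo n_bits is an arbitrary assignment for illustration.
    """
    index = 0
    for g in range(n_bits):
        index = (index << 1) | majority_bit(party_bits[g::n_bits])
    return index


rng = random.Random(7)
votes = [rng.randint(0, 1) for _ in range(500)]  # 500 parties, groups of 16 or 17
print(pick_miner_index(votes))  # an index into about a billion (2**30) candidates
```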

A third interesting strategy, used by NXT, is to use the addresses of the stake-miners for blocks N and N+1 to choose the miner for block N+2; this by definition gives only one choice for the next miner in each block. Adding a criterion that every miner needs to be locked in for 1440 blocks in order to participate prevents sending transactions as a form of double-mining. However, having such rapid stake-miner selection also compromises the nothing-at-stake resistance property due to the probabilistic double-mining problem; this is the reason why clever schemes to make miner determination happen very quickly are ultimately, beyond a certain point, undesirable.
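
A rough sketch of this style of selection, with the 1440-block lock-in as an eligibility filter. This is not NXT's actual code: the hash input format, the stake-weighted walk over sorted accounts, and the names choose_miner and locked_at are assumptions for illustration.

```python
import hashlib

MIN_LOCK = 1440  # blocks an account must be locked in before it may stake-mine


def choose_miner(addr_n: str, addr_n1: str, stakes: dict, locked_at: dict,
                 height: int) -> str:
    """Pick the miner of block N+2 from the miners of blocks N and N+1.

    stakes:    account -> balance
    locked_at: account -> height at which the account locked in its coins
    """
    eligible = sorted(a for a in stakes
                      if a in locked_at and height - locked_at[a] >= MIN_LOCK)
    total = sum(stakes[a] for a in eligible)
    if total <= 0:
        raise ValueError("no stake has been locked in long enough")
    # A given (N, N+1) pair maps to exactly one next miner.
    seed = hashlib.sha256(f"{addr_n}|{addr_n1}".encode()).digest()
    draw = int.from_bytes(seed, "big") % total
    for account in eligible:  # stake-weighted walk over the eligible accounts
        if draw < stakes[account]:
            return account
        draw -= stakes[account]
    raise AssertionError("unreachable: draw is always below the total stake")


stakes = {"alice": 60, "bob": 30, "carol": 10}
locked_at = {"alice": 0, "bob": 0, "carol": 5000}  # carol locked in too recently
print(choose_miner("alice", "bob", stakes, locked_at, height=6000))
```

Because the next miner is fixed the moment block N+1 exists, a stake-miner learns of its turn only one block ahead. That is too close to a fork for the "decided before the fork starts" property to hold reliably, which is the weakness the paragraph describes.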

Long-Range Attacks

While the Slasher approach does effectively solve the nothing-at-stake problem against traditional 51% attacks, a problem arises in the case of something called a “long-range attack”: instead of an attacker starting mining from ten blocks before the current block, the attacker starts ten thousand blocks ago. In a proof-of-work context, this is silly; it basically means doing thousands of times as much work as is necessary to launch an attack. Here, however, creating a block is nearly computationally free, so it’s a reasonable strategy. The reason why it works is that Slasher’s process for punishing multi-mining only lasts for 1000 blocks, and its process for determining new miners lasts 3000 blocks, so outside the “scope” of that range Slasher functions exactly like the naive proof-of-stake coin. Note that Slasher is still a substantial improvement; in fact, assuming the set of users never changes, it can be made fully secure by introducing a rule into each client not to accept forks going back more than 1000 blocks. The problem, however, is what happens when a new user enters the picture.
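
The client-side rule mentioned here is simple to express. In this hypothetical sketch, chains are lists of block hashes from genesis, and local_score and fork_score stand in for whatever fork-choice score the protocol uses.

```python
MAX_REORG_DEPTH = 1000  # client-side rule: never revert more than this many blocks


def reorg_depth(local_chain: list, fork_chain: list) -> int:
    """Number of local blocks the fork would revert (chains are hashes from genesis)."""
    common = 0
    for ours, theirs in zip(local_chain, fork_chain):
        if ours != theirs:
            break
        common += 1
    return len(local_chain) - common


def should_switch(local_chain: list, fork_chain: list,
                  local_score: float, fork_score: float) -> bool:
    # A deep fork is rejected no matter how good it looks; this only protects
    # clients that were already following the chain when it was built.
    if reorg_depth(local_chain, fork_chain) > MAX_REORG_DEPTH:
        return False
    return fork_score > local_score


local = [f"h{i}" for i in range(5000)]
shallow_fork = local[:4500] + [f"x{i}" for i in range(600)]
deep_fork = local[:1000] + [f"y{i}" for i in range(4500)]
print(should_switch(local, shallow_fork, 5000, 5100))  # True: 500 blocks deep
print(should_switch(local, deep_fork, 5000, 9999))     # False: 4000 blocks deep
```

A brand-new client has an empty local_chain, so every candidate fork looks zero blocks deep to it and the rule offers no protection.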

When a new user downloads a proof-of-stake-coin client for the first time, it will see multiple versions of the blockchain: the longest, and therefore legitimate, fork, and many pretenders trying to mine their own chains from the genesis block. As described above, proof-of-stake chains are completely self-referential; hence, the client seeing all of these chains has no idea about any surrounding context like which chain came first or which has more value (note: in a hybrid proof-of-stake plus social graph system, the user would get initial blockchain data from a trusted source; this approach is reasonable, but is not fully decentralized). The only thing that the client can see is the allocation in the genesis block, and all of the transactions since that point. Thus, all “pure” proof-of-stake systems are ultimately permanent nobilities where the members of the genesis block allocation always have the ultimate say. No matter what happens ten million blocks down the road, the genesis block members can always come together and launch an alternate fork with an alternate transaction history and have that fork take over.

If you understand this, and you are still okay with pure proof of stake as a concept (the specific reason why you might still be okay is that, if the initial issuance is done right, the “nobility” should still be large enough that it cannot practically collude), then the realization allows for some more imaginative directions in terms of how proof of stake can play out. The simplest idea is to have the members of the genesis block vote on every block, where double-mining is punished by permanent loss of voting power. Note that this system actually solves nothing-at-stake issues completely, since every genesis block holder has a mining privilege that has value forever into the future, so it will never be worth it to double-mine. This system, however, has a finite lifespan – specifically, the maximum life (and interest) span of the genesis signers – and it also gives the nobility a permanent profit-making privilege, and not just voting rights. Nevertheless, the existence of the algorithm is encouraging because it suggests that long-range nothing-at-stake might be fundamentally resolvable. Thus, the challenge is to figure out some way to make sure voting privileges transfer over while maintaining security at the same time.

Changing Incentives

Another approach to solving nothing-at-stake comes at the problem from a completely different angle. The core problem is, in naive proof-of-stake, rational individuals will double-vote. The Slasher-like solutions all try to solve the problem by making it impossible to double-vote, or at the very least heavily punishing such a strategy. But what if there is another approach; specifically, what if we instead remove the incentive to do so? In all of the proof of stake systems that I described above, the incentive is obvious, and unfortunately fundamental: because whoever is producing blocks needs an incentive to participate in the process, they benefit if they include a block in as many forks as possible. The solution to this conundrum comes from an imaginative, out-of-the-box proposal from Daniel Larimer: transactions as proof of stake.

The core idea behind transactions as proof-of-stake is simple: instead of mining being done by a separate class of individuals, whether computer hardware owners or stakeholders, mining and transaction sending are merged into one. The naive TaPoS algorithm is as follows:

- Every transaction must contain a reference (ie. hash) to the previous transaction

- A candidate state-of-the-system is obtained by calculating the result of a resulting transaction chain

- The correct chain among multiple candidates is the one that has either (i) the greatest total coin-days-destroyed (ie. number of coins in the account * time since last access), or (ii) the highest total transaction fees (these are two different options that we will analyze separately)
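
The naive TaPoS rules translate almost line for line into code. This is a toy model under stated assumptions: each transaction carries its sender's balance and idle time directly instead of deriving them from the state, signatures are ignored, and Tx, score and best_chain are hypothetical names. It is meant to show the two scoring options side by side, not Larimer's actual design.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    prev_hash: str    # rule 1: reference to the previous transaction
    sender: str
    coins: int        # coins in the sending account
    idle_days: float  # days since that account was last accessed (stored, not derived)
    fee: int

    def tx_hash(self) -> str:
        return hashlib.sha256(repr(self).encode()).hexdigest()


def is_linked(chain: list, genesis_hash: str) -> bool:
    """Every transaction must reference the hash of the one before it."""
    prev = genesis_hash
    for tx in chain:
        if tx.prev_hash != prev:
            return False
        prev = tx.tx_hash()
    return True


def score(chain: list, rule: str) -> float:
    if rule == "cdd":   # option (i): total coin-days-destroyed
        return sum(tx.coins * tx.idle_days for tx in chain)
    if rule == "fees":  # option (ii): total transaction fees
        return sum(tx.fee for tx in chain)
    raise ValueError(f"unknown rule: {rule}")


def best_chain(candidates: list, genesis_hash: str, rule: str) -> list:
    return max((c for c in candidates if is_linked(c, genesis_hash)),
               key=lambda c: score(c, rule))


def build_chain(genesis_hash: str, specs: list) -> list:
    """Example helper: link (sender, coins, idle_days, fee) tuples into a chain."""
    chain, prev = [], genesis_hash
    for sender, coins, idle_days, fee in specs:
        tx = Tx(prev, sender, coins, idle_days, fee)
        chain.append(tx)
        prev = tx.tx_hash()
    return chain


GENESIS = "genesis"
chain_a = build_chain(GENESIS, [("alice", 100, 10, 1), ("bob", 50, 2, 1)])
chain_b = build_chain(GENESIS, [("mallory", 500, 1, 5)])
print(best_chain([chain_a, chain_b], GENESIS, "cdd") is chain_a)   # True: 1100 vs 500
print(best_chain([chain_a, chain_b], GENESIS, "fees") is chain_b)  # True: 5 vs 2
```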

This algorithm has the property that it is extremely unscalable, breaking down beyond about 1 transaction per 2-5 seconds, and it is not the one that Larimer suggests or the one that will actually be used; rather, it’s simply a proof of concept that we will analyze to see if this approach is valid at all. If it is, then there are likely ways to optimize it.

Now, let’s see what the economics of this are. Suppose that there is a fork, and there are two competing versions of the TaPoS chain. You, as a transaction sender, made a transaction on chain A, and there is now an upcoming chain B. Do you have the incentive to double-mine and include your transaction in chain B as well? The answer is no – in fact you actually want to double-spend your recipient so you would not put the transaction on another chain. This argument is especially potent in the case of long-range attacks, where you already received your product in exchange for the funds; in the short term, of course, the incentive still exists to make sure the transaction is sent, so senders do have the incentive to double-mine; however, because the worry is strictly time-limited this can be resolved via a Slasher-like mechanism.

One concern is this: given the presence of forks, how easy is it to overwhelm the system? If, for example, there is a fork, and one particular entity wants to double-spend, under what circumstances is that possible? In the transaction-fee version, the requirement is pretty simple: you need to spend more txfees than the rest of the network. This seems weak, but in reality it isn’t; we know that in the case of Bitcoin, once the currency supply stops increasing mining will rely solely on transaction fees, and the mechanics are exactly the same (since the amount that the network will spend on mining will roughly correspond to the total number of txfees being sent in); hence, fee-based TaPoS is in this regard at least as secure as fee-only PoW mining. In the second case, we have a different model: instead of mining with your coins, you are mining with your liquidity. Anyone can 51% attack the system if and only if they have a sufficiently large quantity of coin-days-destroyed on them. Hence, the cost of spending a large txfee after the fact is replaced by the cost of sacrificing liquidity before the fact.

Cost of Liquidity

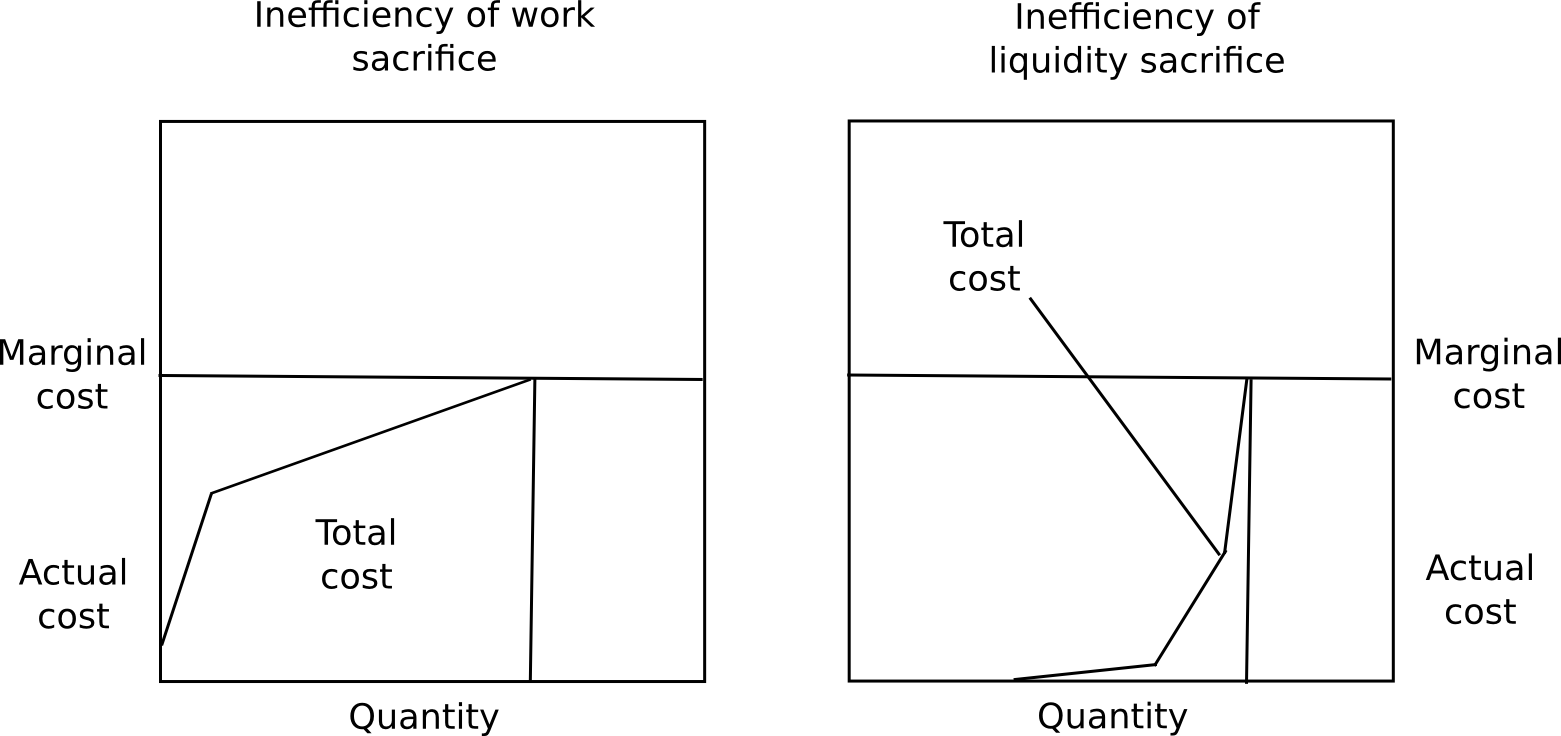

The discussion around liquidity leads to another important philosophical point: security cannot be cost-free. In any system where there is a block reward, the thing that is the prerequisite for the reward (whether CPU, stake, or something else) cannot be free, since otherwise everyone would be claiming the reward ad infinitum, and in TaPoS, transaction senders need to be providing some kind of fee to justify security. Furthermore, whatever resource is used to back the security, whether CPU, currency sacrifices or liquidity sacrifices, the attacker need only get their hands on more of that resource than the rest of the network. Note that, in the case of liquidity sacrifices (which is what naive proof of stake is), the relevant quantity here is actually not 50% of coins, but rather the privilege of accessing 50% of coins for a few hours – a service that, assuming a perfectly efficient market, might only cost a few hundred thousand dollars.

The solution to this puzzle is that marginal cost is not the same thing as average cost. In the case of proof of work, this is true only to a very limited extent; although miners do earn a positive nonzero profit from mining, they all pay a high cost (unless they’re CPU miners heating their homes, but even then there are substantial efficiency losses; laptops running hash functions at 100%, though effective at heating, are necessarily less effective than systems designed for the task). In the case of currency sacrifices, everyone pays the same, but the payment is redistributed as a dividend to everyone else, and this profit is too dispersed to be recovered via market mechanisms; thus, although the system is costly from a local perspective, it is costless from a global perspective.

The last option, liquidity sacrifice, is in between the two. Although liquidity sacrifice is costly, there is a substantial amount of disparity in how much people value liquidity. Some people, like individual users or businesses with low savings, heavily value liquidity; others, like savers, do not value liquidity at all (eg. I could not care less if I lost the ability to spend ten of my bitcoins for some duration). Hence, although the marginal cost of liquidity will be high (specifically, necessarily equal to either the mining reward or the transaction fee), the average cost is much lower. Hence, there is a leverage effect that allows the cost of an attack to be much higher than the inefficiency of the network, or the amount that senders spend on txfees. Additionally, note that in Larimer’s scheme specifically, things are rigged in such a way that all liquidity that is sacrificed in consensus is liquidity that was being sacrificed anyway (namely, by not sending coins earlier), so the practical level of inefficiency is zero.
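
The gap between marginal and average cost can be illustrated with made-up numbers. In this sketch the valuations are purely hypothetical and liquidity_costs is an illustrative helper. The point is only that when most of the liquidity comes from holders who barely value it, the average cost can sit far below the marginal one.

```python
def liquidity_costs(offers: list, coin_days_needed: int) -> tuple:
    """Marginal and average cost per coin-day of meeting a security target.

    offers: each holder's (hypothetical) cost of giving up one coin-day of liquidity,
            one entry per coin-day on offer. The cheapest liquidity is used first.
    """
    if not 0 < coin_days_needed <= len(offers):
        raise ValueError("need between 1 and len(offers) coin-days")
    used = sorted(offers)[:coin_days_needed]
    marginal = used[-1]              # what the last, most reluctant unit costs
    average = sum(used) / len(used)  # what the network pays on average
    return marginal, average


# Hypothetical population: 80 coin-days from savers who don't care about liquidity,
# 20 from users who value it at 1.0 per coin-day.
offers = [0.0] * 80 + [1.0] * 20
print(liquidity_costs(offers, 90))  # marginal 1.0, average about 0.11
```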

Now, TaPoS does have its problems. First, if we try to make it more scalable by reintroducing the concept of blocks, then there ideally needs to be some reason to produce blocks that is not profit, so as not to reintroduce the nothing-at-stake problem. One approach may be to force a certain class of large transaction senders to create blocks. Second, attacking a chain is still theoretically “cost-free”, so the security assurances are somewhat less nice than they are in proof-of-work. Third, in the context of a more complicated blockchain like Ethereum, and not a currency, some transactions (eg. finalizing a bet) are actually profitable to send, so there will be incentive to double-mine on at least some transactions (though not nearly all, so there is still some security). Finally, it’s a genesis-block-nobility system, just like all proof-of-stake necessarily is. However, as far as pure proof-of-stake systems go, it does seem a much better backbone than the version of proof of stake that emulated Bitcoin mining.

Hybrid Proof of Stake

Given the attractiveness of proof of stake as a solution for increasing efficiency and security, and its simultaneous deficiencies in terms of zero-cost attacks, one moderate solution that has been brought up many times is hybrid proof of stake, in its latest incarnation called “proof of activity“. The idea behind proof of activity is simple: blocks are produced via proof of work, but every block randomly assigns three stakeholders that need to sign it. The next block can only be valid once those signatures are in place. In this system, in theory, an attacker with 10% stake would see 999 of his 1000 blocks not being signed, whereas in the legitimate network 729 out of 1000 blocks would be signed; hence, such an attacker would be penalized in mining by a factor of 729.
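
The 999-in-1000 and 729-in-1000 figures follow from cubing each side's stake share. A short check, assuming the three signers are drawn independently with probability proportional to stake (the helper name is hypothetical):

```python
def signed_fraction(stake_share: float, signers_per_block: int = 3) -> float:
    """Chance that all pre-selected signers of a block belong to one side of a fork,
    assuming each signer is drawn independently in proportion to stake."""
    return stake_share ** signers_per_block


attacker = signed_fraction(0.10)  # about 0.001: 1 block in 1000 gets signed
honest = signed_fraction(0.90)    # about 0.729: 729 blocks in 1000 get signed
print(honest / attacker)          # about 729
```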

However, there is a problem: what motivates signers to sign blocks on only one chain? If the arguments against pure proof of stake are correct, then most rational stake-miners would sign both chains. Hence, in hybrid PoS, if the attacker signs only his chain, altruists sign only the legitimate chain, and everyone else signs both, then an attacker who can overpower the altruists on the stake front can overtake the chain with less than a 51% attack on the mining front. If we trust that altruists as a group are more powerful in stake than any attacker, but we don’t trust that too much, then hybrid PoS seems like a reasonable hedge option; however, given the reasoning above, if we want to hybridize, one might ask whether hybrid PoW + TaPoS might be the better way to go. For example, one could imagine a system where transactions need to reference recent blocks, and a blockchain’s score is calculated based on proof of work and coin-days-destroyed counts.

Conclusion

Will we see proof of stake emerge as a viable alternative to proof of work in the next few years? It may well be. From a pure efficiency perspective, if Bitcoin, or Ethereum, or any other PoW-based platform gets to the point where it has a market cap similar to that of gold, silver, the USD, EUR or CNY, or any other mainstream asset, then over a hundred billion dollars worth of new currency units will be produced per year. Under a pure-PoW regime, an amount of economic power approaching that will be spent on hashing every year. Thus, the cost to society of maintaining a proof-of-work cryptocurrency is about the same as the cost of maintaining the Russian military (the analogy is particularly potent because militaries are also proof of work; their only value to anyone is protecting against other militaries). Under hybrid PoS, that might safely be dropped to $30 billion per year, and under pure PoS it would be almost nothing, except, depending on implementation, maybe a few billion dollars of cost from lost liquidity.

Ultimately, this boils down to a philosophical question: exactly how much does decentralization mean to us, and how much are we willing to pay for it? Remember that centralized databases, and even quasi-centralized ones based on Ripple consensus, are free. If perfect decentralization is not worth a $100 billion price tag, then we should just centralize and let a few governments run the databases. But if we have a solid, viable proof of stake algorithm, then we have a third option: a system which is both decentralized and cost-free (note that useful proof of work also fits this criterion, and may be easier); in that case, the dichotomy does not exist at all and decentralization becomes the obvious choice.